Principles of speech recognition

The essence of speech recognition is to convert a speech sequence into a text sequence. The commonly used system framework is as follows:

To give an overall picture, this document first introduces the front-end speech signal processing techniques. It then explains the basic principles of speech recognition and describes acoustic models and language models in more detail.

I. Speech Preprocessing

Front-end signal processing operates on the raw speech signal so that the processed signal better represents the essential characteristics of the speech. The relevant techniques are described below:

1. Voice endpoint detection

Voice endpoint detection, also known as voice activity detection (VAD), detects the start and end positions of the voice signal and separates voice segments from non-voice (silence or noise) segments. VAD algorithms can be broadly classified into three categories: threshold-based VAD, classifier-based VAD, and model-based VAD.

- Threshold-based VAD distinguishes speech from non-speech by extracting time-domain features (short-time energy, short-time zero-crossing rate, etc.) or frequency-domain features (MFCC, spectral entropy, etc.) and setting reasonable thresholds.

- Classifier-based VAD treats speech activity detection as a binary classification problem (speech versus non-speech). The classifier is trained with machine learning methods.

- Model-based VAD builds a complete speech recognition model to distinguish speech segments from non-speech segments. Because of real-time requirements, it has not been applied in practice.
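The threshold-based approach can be sketched in a few lines of Python. This is a minimal illustration using short-time energy only; the frame length of 160 samples, the threshold of 0.01, and the function names are assumptions chosen for the example, not values from any particular system.

```python
import math

def short_time_energy(samples, frame_len, hop):
    """Return the mean squared amplitude of each frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(sum(s * s for s in frame) / frame_len)
    return energies

def energy_vad(samples, frame_len=160, hop=160, threshold=0.01):
    """Mark a frame as speech (True) when its energy exceeds the threshold."""
    return [e > threshold for e in short_time_energy(samples, frame_len, hop)]

def find_endpoints(flags):
    """Return (first, last) active frame indices, or None if nothing is active."""
    active = [i for i, flag in enumerate(flags) if flag]
    return (active[0], active[-1]) if active else None

# Synthetic test signal (assumed): 3 silent frames, 4 frames of a tone, 3 silent frames.
sample_rate = 16000
silence = [0.0] * (160 * 3)
tone = [0.5 * math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(160 * 4)]
signal = silence + tone + silence

flags = energy_vad(signal)
endpoints = find_endpoints(flags)
```

In practice the threshold is usually tuned to the noise floor, and energy is often combined with the zero-crossing rate or a frequency-domain feature.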

2. Noise reduction

Everyday environments usually contain noise from sources such as air conditioners and fans. Noise reduction algorithms aim to reduce this environmental noise, improve the signal-to-noise ratio, and thereby improve recognition accuracy.

Commonly used noise reduction algorithms include adaptive LMS and Wiener filtering.

3. Echo cancellation

Echo arises in duplex mode, when the microphone picks up the signal played by the device's own loudspeaker, for example when the device is playing music and must still be controlled by voice.

Echo cancellation is usually implemented with an adaptive filter: a filter with adjustable parameters is designed, an adaptive algorithm (LMS, NLMS, etc.) adjusts those parameters to simulate the channel through which the echo is generated, and the resulting echo estimate is subtracted from the microphone signal.
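The adaptive-filter idea can be illustrated with a small NLMS sketch. The echo path `true_path`, the tap count, and the step size `mu` are made-up values for the demonstration, and the microphone signal contains only echo (no near-end speech or noise), which is a simplifying assumption.

```python
import random

def nlms_echo_cancel(far_end, mic, taps=4, mu=0.5, eps=1e-8):
    """Adapt an FIR filter so that its output tracks the echo contained in mic.

    Returns the residual signal (mic minus estimated echo) and the final weights.
    """
    w = [0.0] * taps
    buf = [0.0] * taps              # most recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        buf = [x] + buf[:-1]
        echo_hat = sum(wi * bi for wi, bi in zip(w, buf))
        e = d - echo_hat            # what remains after cancellation
        norm = sum(b * b for b in buf) + eps
        w = [wi + mu * e * bi / norm for wi, bi in zip(w, buf)]
        residual.append(e)
    return residual, w

random.seed(0)
far = [random.uniform(-1, 1) for _ in range(2000)]   # loudspeaker signal
true_path = [0.6, 0.3, -0.1, 0.0]                    # hypothetical echo channel
mic = [
    sum(true_path[k] * far[n - k] for k in range(len(true_path)) if n - k >= 0)
    for n in range(len(far))
]

residual, weights = nlms_echo_cancel(far, mic)
```

After adaptation, `weights` approximates `true_path` and the residual approaches zero. A real system would also need double-talk handling, which this sketch omits.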

4. Reverberation elimination

In a room, the speech signal reaches the microphone after multiple reflections. The resulting reverberant signal tends to produce a masking effect, which sharply degrades the recognition rate, so it needs to be handled in the front end.

Reverberation elimination methods mainly include inverse filtering-based, beamforming-based, and deep learning-based methods.

5. Sound source localization

Microphone arrays are widely used in speech recognition, and sound source localization is one of the main tasks of array signal processing. Determining the speaker's position with a microphone array prepares for the beamforming performed in the recognition stage.

Commonly used sound source localization algorithms include high-resolution spectral estimation algorithms (such as MUSIC), time difference of arrival (TDOA) algorithms, and beamforming-based algorithms such as minimum variance distortionless response (MVDR).
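As an illustration of the TDOA idea, the following sketch estimates the delay between two microphone signals by searching for the lag that maximizes their cross-correlation. The signals are synthetic, and the microphone spacing (0.2 m) and speed of sound (343 m/s) used for the angle conversion are assumed values.

```python
import math
import random

def estimate_delay(ref, delayed, max_lag):
    """Return the lag in samples that maximizes the cross-correlation.

    A positive result means `delayed` lags behind `ref`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n in range(len(ref)):
            m = n + lag
            if 0 <= m < len(delayed):
                score += ref[n] * delayed[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

random.seed(1)
source = [random.gauss(0, 1) for _ in range(400)]
true_delay = 5
mic1 = source
mic2 = [0.0] * true_delay + source[:-true_delay]   # same signal, 5 samples later

lag = estimate_delay(mic1, mic2, max_lag=20)

# Convert the lag to an arrival angle for a two-microphone array (far-field assumption).
sample_rate = 16000
spacing_m = 0.2
speed_of_sound = 343.0
ratio = max(-1.0, min(1.0, speed_of_sound * (lag / sample_rate) / spacing_m))
angle_deg = math.degrees(math.asin(ratio))
```

Practical systems often use a weighted variant such as GCC-PHAT, which is more robust to reverberation than the plain cross-correlation shown here.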

6. Beamforming

Beamforming forms spatial directivity by processing (weighting, time delay, summation, etc.) the output signals of the microphones in an array arranged in a certain geometric structure. It can be used for sound source localization and reverberation elimination.

Beamforming is mainly divided into fixed beamforming, adaptive beamforming and post-filtering beamforming.
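The simplest fixed beamformer is delay-and-sum, which applies exactly the time-delay and summation steps mentioned above. The sketch below assumes integer sample delays that are already known (for example from a TDOA estimate); the signals and function name are illustrative.

```python
def delay_and_sum(channels, delays):
    """Align each channel by its integer sample delay, then average the channels.

    delays[i] is the number of samples by which channel i lags the reference.
    """
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    output = []
    for n in range(length):
        total = sum(ch[n + d] for ch, d in zip(channels, delays))
        output.append(total / len(channels))
    return output

# Two-microphone example: the second microphone receives the signal 2 samples later.
source = [float(n % 7) for n in range(50)]
mic_a = source
mic_b = [0.0, 0.0] + source[:-2]

aligned = delay_and_sum([mic_a, mic_b], delays=[0, 2])
```

Signals arriving from the steered direction add coherently, while uncorrelated noise and sound from other directions are attenuated by the averaging.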

II. Basic principles of speech recognition

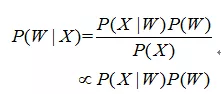

Speech recognition converts a speech signal into the corresponding text. The system mainly consists of four parts: feature extraction, the acoustic model, the language model, and the dictionary with decoding. The processing steps are as follows:

- To extract features more effectively, the collected sound signal is usually filtered and split into frames so that the signal to be analyzed is separated from the original signal.
- Feature extraction converts the sound signal from the time domain to the frequency domain and provides suitable feature vectors for the acoustic model.
- The acoustic model computes a score for each feature vector based on its acoustic characteristics.
- The language model computes, based on linguistic theory, the probability of each possible phrase sequence for the sound signal.
- Finally, the phrase sequence is decoded with the existing dictionary to obtain the final text.

With X denoting the acoustic feature sequence and W the word sequence, the relationship between the acoustic model and the language model is expressed by the Bayesian formula as follows:

$$
W^{*} = \arg\max_{W} P(W \mid X) = \arg\max_{W} \frac{P(X \mid W)\,P(W)}{P(X)} = \arg\max_{W} P(X \mid W)\,P(W)
$$

where P(X|W) is called the acoustic model and P(W) is called the language model. Most research treats the acoustic model and the language model separately, and the acoustic model is the main point of difference between speech recognition systems. In addition, end-to-end (Seq-to-Seq) approaches based on big data and deep learning are evolving. They compute P(W|X) directly, that is, the acoustic model and the language model are treated as a whole.
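A toy example of how the two models combine during decoding: each candidate word sequence W receives an acoustic score P(X|W) and a language model score P(W), and the decoder picks the candidate with the highest product (a sum in the log domain). The candidate texts and all probabilities below are invented for illustration.

```python
import math

def decode(candidates):
    """Return the text of the candidate maximizing log P(X|W) + log P(W)."""
    best = max(candidates, key=lambda c: c["log_p_x_given_w"] + c["log_p_w"])
    return best["text"]

# Hypothetical scores: the second candidate fits the audio slightly better,
# but the language model considers it far less likely.
candidates = [
    {"text": "recognize speech",
     "log_p_x_given_w": math.log(0.20), "log_p_w": math.log(0.010)},
    {"text": "wreck a nice beach",
     "log_p_x_given_w": math.log(0.25), "log_p_w": math.log(0.001)},
]

best_text = decode(candidates)
```

Real decoders search a very large hypothesis space rather than a short list, and usually apply a tunable weight to the language model score.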

III. Traditional HMM acoustic model

The acoustic model relates the observed features of the speech signal to the speech modeling units of the sentence, that is, it computes P(X|W). A Hidden Markov Model (HMM) is usually used to handle the variable-length relationship between speech and text, as in the HMM shown in the following figure.

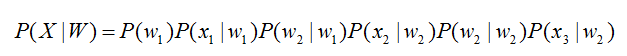

The acoustic model is represented as:

$$
P(X \mid W) = P(w_1)\,P(x_1 \mid w_1)\,P(w_2 \mid w_1)\,P(x_2 \mid w_2)\,P(w_2 \mid w_2)\,P(x_3 \mid w_2)
$$

Here, the initial state probability P(w1) and the state transition probabilities (P(w2|w1), P(w2|w2)) can be computed with conventional statistical methods, and the emission probabilities (P(x1|w1), P(x2|w2), P(x3|w2)) can be obtained from a Gaussian mixture model (GMM) or a deep neural network (DNN).
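The product of initial, transition, and emission probabilities along one state path can be computed as in the sketch below. The state path (w1, w2, w2) and observations (x1, x2, x3) follow the terms named in the text; the numeric probabilities are made up for the example.

```python
def path_probability(initial, transitions, emissions, states, observations):
    """Multiply initial, transition, and emission probabilities along one state path."""
    p = initial[states[0]] * emissions[(observations[0], states[0])]
    for prev, cur, obs in zip(states, states[1:], observations[1:]):
        p *= transitions[(prev, cur)] * emissions[(obs, cur)]
    return p

# Hypothetical parameters for the two-state example.
initial = {"w1": 1.0}
transitions = {("w1", "w2"): 0.4, ("w2", "w2"): 0.7}
emissions = {("x1", "w1"): 0.5, ("x2", "w2"): 0.6, ("x3", "w2"): 0.3}

p = path_probability(
    initial, transitions, emissions,
    states=["w1", "w2", "w2"],
    observations=["x1", "x2", "x3"],
)
```

This evaluates a single alignment between frames and states. A full HMM evaluation would sum over all valid alignments (forward algorithm) or take the best one (Viterbi).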

The traditional speech recognition system generally adopts the acoustic model based on GMM-HMM. The schematic diagram is as follows:

Here a_{w_i w_j} denotes the state transition probability P(wj|wi), and the speech features are represented as X = [x1, x2, x3, …]. The Gaussian mixture model (GMM) establishes the relationship between the features and the states, which yields the emission probability P(xj|wi). Different states wi correspond to different GMM parameters.
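To make the "one GMM per state" idea concrete, here is a minimal sketch that evaluates the emission likelihood of a feature under two states. It uses a scalar feature for simplicity (real features are vectors), and the mixture weights, means, and variances are invented for illustration.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian at x."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def gmm_likelihood(x, weights, means, variances):
    """Emission likelihood P(x | state) as a weighted sum of Gaussian densities."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))

# Each HMM state has its own GMM parameters (hypothetical values).
state_gmms = {
    "w1": {"weights": [0.5, 0.5], "means": [-1.0, 0.0], "variances": [1.0, 1.0]},
    "w2": {"weights": [0.3, 0.7], "means": [3.0, 4.0], "variances": [1.0, 0.5]},
}

x = 3.5
likelihoods = {state: gmm_likelihood(x, **params) for state, params in state_gmms.items()}
best_state = max(likelihoods, key=likelihoods.get)
```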

Speech recognition based on GMM-HMM can only learn the shallow features of speech, but cannot obtain the high-order correlation between data features. DNN-HMM can improve the recognition performance by using the strong learning ability of DNN. The schematic diagram of its acoustic model is as follows:

The difference between GMM-HMM and DNN-HMM is that a DNN replaces the GMM for computing the emission probability P(xj|wi). The advantage of the GMM-HMM model is that it requires little computation while still giving reasonable results. The DNN-HMM model improves the recognition rate but demands more computing power from the hardware. The choice of model can therefore be adjusted according to the actual application.

IV. Seq-to-Seq model

Speech recognition can be seen as a conversion between two sequences: the goal is to transcribe an input audio sequence into the corresponding text sequence. The audio sequence can be described as O = o1, o2, o3, …, ot, where oi represents the speech features of one frame and t is the number of time steps in the audio sequence (usually each second of speech is divided into 100 frames, and 39-dimensional or 120-dimensional features can be extracted from each frame). Similarly, the text sequence can be described as W = w1, w2, w3, …, wn, where n is the number of words in the speech (the units are not necessarily words; other modeling units such as phonemes are also possible). It follows that speech recognition can be modeled as a sequence-to-sequence problem.

Traditional speech recognition uses a hybrid DNN-HMM structure, which also needs several other components such as a language model, a pronunciation dictionary, and a decoder. Building the pronunciation dictionary requires a lot of expert knowledge, and the components must be trained separately, so they cannot be optimized jointly. The Seq2Seq model offers a new solution for speech recognition modeling, with several clear advantages: end-to-end joint optimization, no reliance on the Markov assumption, and no need for a pronunciation dictionary.

CTC

In speech recognition, we usually have speech segments and their text labels but do not know the exact alignment between characters and speech frames, which makes training difficult. During training, CTC does not rely on one specific alignment. Instead, it sums the probabilities of all possible sequences that correspond to the label, so it is well suited to this type of recognition task.

CTC decodes synchronously with the acoustic feature sequence: every time a feature is input, a label is output, so the input and output sequences have the same length. However, as noted earlier, the lengths of the audio sequence and the text sequence are usually very different. CTC therefore introduces a blank symbol, and a text sequence containing blanks is called a CTC alignment. To recover the labeled text from an alignment, first merge consecutive repeated symbols, then delete the blanks.
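The mapping from a CTC alignment back to text (merge repeats, then delete blanks) can be written as a short function. The blank symbol `-` and the example alignments are choices made for this illustration.

```python
def ctc_collapse(alignment, blank="-"):
    """Merge consecutive repeated symbols, then drop blanks."""
    output = []
    previous = None
    for symbol in alignment:
        # Keep a symbol only if it differs from the previous frame and is not blank.
        if symbol != previous and symbol != blank:
            output.append(symbol)
        previous = symbol
    return "".join(output)

# The blank between the two "l" runs is what preserves the double letter.
text_a = ctc_collapse("hh-e-ll-lo-")
text_b = ctc_collapse("h-eel-l-oo-")
text_c = ctc_collapse("hhelllo")
```

Here `text_a` and `text_b` are two different alignments of the same label, which is why CTC training sums over all of them. Without a blank between the repeated letters, as in the third input, the two `l` symbols merge into one.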

RNN Transducer

CTC has brought great benefits to acoustic modeling for speech recognition, but CTC models still have problems. The most significant is that CTC assumes the outputs of the model are conditionally independent, an assumption that deviates from the nature of the speech recognition task. In addition, the CTC model has no language modeling ability, so it does not truly achieve end-to-end joint optimization.

RNN-T no longer maps each input to exactly one output. Instead, it can generate multiple output tokens for one input, until a null character is output to indicate that the next input is required. In other words, the final number of null characters must be the same as the length of the input, because each frame of input must generate exactly one null character.
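The relationship between null characters and input frames can be checked on an example output path. The sketch below groups the tokens of an RNN-T path by the input frame that produced them; the null symbol `<b>` and the path itself are hypothetical.

```python
def split_rnnt_path(path, blank="<b>"):
    """Group emitted tokens by input frame; each blank closes one frame."""
    frames, current = [], []
    for token in path:
        if token == blank:
            frames.append(current)   # blank means: move on to the next input frame
            current = []
        else:
            current.append(token)
    return frames

# A path over 4 input frames: a frame may emit zero, one, or several tokens.
path = ["h", "<b>", "<b>", "e", "l", "<b>", "l", "o", "<b>"]

frames = split_rnnt_path(path)
num_blanks = path.count("<b>")
text = "".join(token for frame in frames for token in frame)
```

Each input frame contributes exactly one null character, so `num_blanks` equals the number of frames, while the number of emitted tokens is independent of the input length.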

V. Language Model

The language model deals with text. Consider an intelligent input method: when we type the pinyin "nihao", the top candidate is the common word "你好" ("hello") rather than a less likely string of characters with the same pronunciation. The candidates are ordered by their language model scores.

The language model in speech recognition also operates on word sequences. It is combined with the output of the acoustic model to give the word sequence with the maximum probability as the recognition result. Because the language model represents the probability of a word sequence, it is generally expressed with the chain rule. For example, if W consists of w1, w2, …, wn, then P(W) can be expressed with conditional probabilities:

$$
P(W) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1, w_2) \cdots P(w_n \mid w_1, w_2, \ldots, w_{n-1})
$$

Because the conditions become too long, these probabilities are difficult to estimate. The common practice is to assume that the probability distribution of each word depends only on the few words before it. Such a language model is called an n-gram model: the probability distribution of each word depends only on the previous n-1 words. For example, in the trigram (n = 3) model, the chain-rule expression for P(W) simplifies to:

$$
P(W) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1, w_2)\,P(w_4 \mid w_2, w_3) \cdots P(w_n \mid w_{n-2}, w_{n-1})
$$
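A trigram model can be estimated from counts, as in the following minimal sketch. The three-sentence corpus, the padding symbols `<s>` and `</s>`, and the absence of smoothing are simplifying assumptions for illustration.

```python
from collections import Counter

def pad(words):
    """Add two start symbols and one end symbol so every word has two predecessors."""
    return ["<s>", "<s>"] + words + ["</s>"]

def train_trigram(sentences):
    """Count trigrams and their two-word contexts."""
    trigrams, contexts = Counter(), Counter()
    for words in sentences:
        padded = pad(words)
        for a, b, c in zip(padded, padded[1:], padded[2:]):
            trigrams[(a, b, c)] += 1
            contexts[(a, b)] += 1
    return trigrams, contexts

def sentence_prob(trigrams, contexts, words):
    """P(W) as a product of maximum-likelihood estimates P(c | a, b)."""
    padded = pad(words)
    p = 1.0
    for a, b, c in zip(padded, padded[1:], padded[2:]):
        if contexts[(a, b)] == 0:
            return 0.0               # unseen context; a real model would smooth
        p *= trigrams[(a, b, c)] / contexts[(a, b)]
    return p

corpus = [
    ["i", "like", "speech"],
    ["i", "like", "music"],
    ["you", "like", "speech"],
]
trigrams, contexts = train_trigram(corpus)

p_seen = sentence_prob(trigrams, contexts, ["i", "like", "speech"])
p_unseen = sentence_prob(trigrams, contexts, ["speech", "like", "i"])
```

The word order that occurs in the corpus receives a non-zero probability, while the scrambled sentence receives zero. Practical language models apply smoothing or back-off so that unseen n-grams are not assigned zero probability.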